I will deploy your large language model on RunPod pods or serverless workers, served with vLLM

Delivery Time: 2 days

Languages: English, French

Service Description

Convert a Large Language Model into a Production-Ready API

I will turn your Hugging Face or custom checkpoint into a fast serverless endpoint on RunPod, ready for real users within two days.

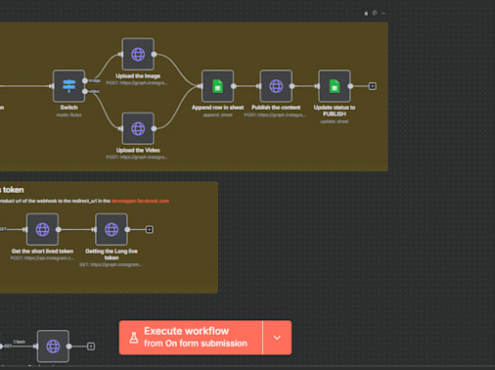

High-Quality Infrastructure on RunPod

Autoscaling from zero to multiple GPU workers in under a minute

No cold starts for requests served by a pool of pre-warmed workers

Usage-based pricing on RTX 4090, A100, and H100 GPUs

Live metrics, alerts, and log collection

CI/CD (continuous integration and deployment) pipeline for easy redeployments

Proven Experience With:

vLLM and TGI chat APIs serving models with over 70B parameters

Retrieval-augmented generation (RAG) backends with response times under 200 ms

Hot-swapping of LoRA adapters and serving of 4-bit quantized models

Multi-region backup through Cloudflare

Why Choose This Service:

Experienced AI and backend engineer, and a contributor to vLLM

More than 50 RunPod deployments with near-perfect uptime

Security-focused builds: JWT authentication, IP allowlists, and infrastructure as code

Performance tuning that brings time-to-first-token latency below 50 ms

Ready to Deploy?

Send me your model link, expected traffic volume, and preferred region. I respond quickly and deliver fast. Let's get your LLM launched today!